Reference Architecture for SQL Server 2016 In-Memory OLTP

Solution Overview

The Microsoft In-memory OLTP technology provides hosting service providers the opportunity to support following key business scenarios:

- Increasing OLTP workload performance with memory-optimized tables by up to 30 to 40 times.

This powerful technology takes advantage of large amount of memory and dozens of cores to increase the performance of OLTP operations. This document describes the following:

- Managing in-memory tables and indexes in memory and in storage and their reference architecture implementation guidance

- Getting better performance with natively compiled stored procedures and concurrency, when accessing memory-optimized table.

These scenarios are the focus of this document. Each scenario's architecture is described from an architectural point of view.

Intended Audience

The audience for concepts explained in this document are the Infrastructure Consultants, Architects and Database Administrators of hosting service providers. Hosting service providers who are considering utilizing their in-house hardware and software investments towards the opportunity to increase bottom line revenue. Along with in-house hardware Hosting service providers can also utilize the Microsoft Hybrid Cloud Platform in incremental stages to offer ancillary services around In-Memory OLTP to benefit their consumers.

Architectural Overview

The Reference Architecture gives an overview of how in-memory technology fits in the overall solution architecture of a service provider. At a high level we can visualize this as three layered architecture which includes Infrastructure, Platform and Application. Each layer has its own importance and association.

Infrastructure

The components within infrastructure layer represents the technical capabilities of service provider which are required to host on-premise platform, software and applications (if any). The infrastructure layer of a service provider facilitates IaaS services to consumers to host in-memory enabled databases on virtualized environment as well as in the form of a dedicated physical hardware, in either scenario the compute power - physical memory and compute cores required to manage in-memory workload are dedicated and can be scaled up or scaled down on the need basis. At the same time in a given database not all tables are in-memory workload eligible, all such tables are stored in storage area as disk based tables. The network component of this layer represent the physical network switches, routers, firewalls etc. and is used to establish two-way communication between infrastructure, software, platform and application.

Platform

Platform layer works in conjunction with infrastructure and application to provide database service, this service offers hosting of both in-memory enabled and disk based databases. Consumers' databases get hosted on this platform and in-memory tables can be enabled based on consumers' requirements. Enabling and configuring in-memory table in a given database is very straight forward, two key things which we have to be keep in consideration are availability of compute resources and whether an OLTP table is eligible for in-memory workload or not.

Application

Application layer works in conjunction with infrastructure and platform and provide data to end users for their analysis. There could be a variety of applications which might want to gain access to data from an in-memory OLTP database and there are certain considerations one has to keep in view when connecting to in-memory OLTP databases.

Desktop

Accessing in-memory OLTP database from a desktop application is possible and there are no special changes or considerations one has to make. It is seamless to access in-memory OLTP database from a desktop because under the cover everything this is taken care at the database platform side and from an application perspective it is as good as connecting to any standard SQL database. This means be it a .NET thick client or a Java or any other application as long as they can connect to SQL databases they would be able to leverage in-memory OLTP database features.

Mobile

Accessing in-memory OLTP database from mobile devices such as phones and tablets is seamless and transparent. Mobile apps can connect to SQL databases and leverage in-memory table technology. This holds good for different mobile platforms like iOS, Android and Windows.

A Mobile device such as phone and tablet can connect to an in-memory OLTP database using APIs or a direct mode in either cases from a development point of view there is no change as in-memory OLTP technology works at server side. However high performance of the system becomes instantly visible based on query response time.

Web

Accessing in-memory OLTP database from Web apps is easy and seamless just like accessing any other database in SQL Server. Web application developed on variety of platform like .NET as well as third party platform like Java, Python, PHP etc., can leverage in-memory OLTP database seamlessly because in-memory OLTP technology works at server side, this means web developers from any platform need not to worry about any changes into their SQL Server database access method. Same hold true for web based reporting applications, these can connect to in-memory OLTP database without any change and can leverage high performance response back from the database.

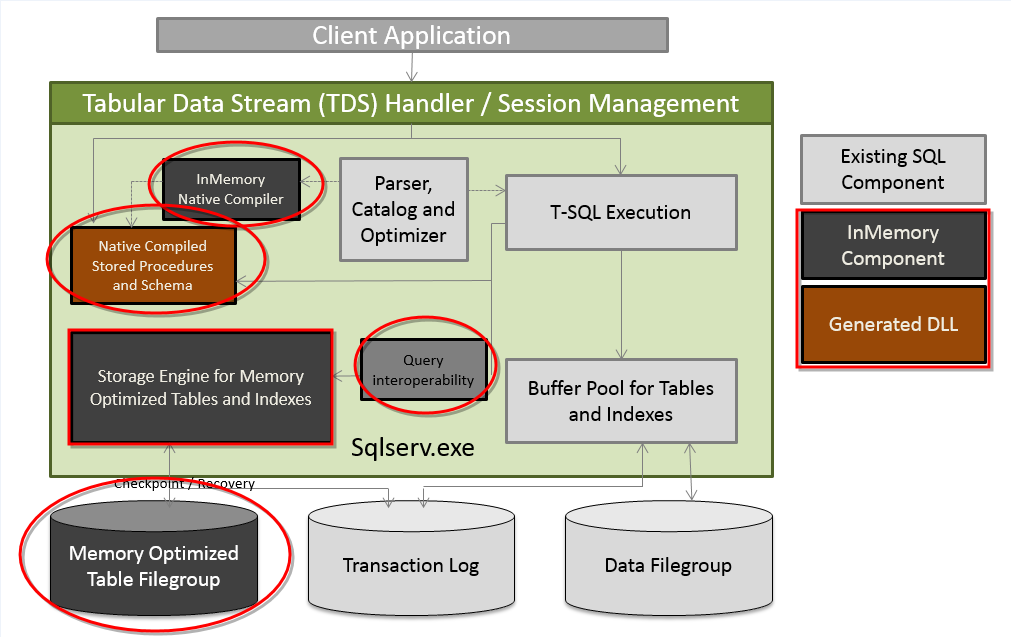

SQL Server engine with the In-Memory OLTP components.

At technology level, in-Memory OLTP is integrated with the SQL Server relational engine, and can be accessed transparently using the same interfaces.

The client application connects to the TDS Handler the same way for memory-optimized tables or disk-based tables, whether it will be calling natively compiled stored procedures or interpreted Transact-SQL. You can see that interpreted Transact-SQL can access memory-optimized tables using the interop capabilities, but that natively compiled stored procedures can only access memory optimized tables.

Architecture Design & Primary Scenarios

With the growth in hardware technology, primary memory size of server systems has grown at a rapid and at the same time it became affordable, organizations in various business scenarios are using In-memory OLTP databases to accelerate performance of their transactional systems by up to 30 to 40 times. These requirements are influencing Microsoft consumers having highly transactional workloads up to Terabytes to Petabytes of data to design their application with memory optimized tables to deliver faster business insights with more than 100x faster queries and reports with supported security and disaster recovery.

Hosting Service Providers

Hosting service providers can leverage the innovative solutions discussed in this document by speeding up their existing and new consumers OLTP workload with memory-optimized tables. This provides up to 30 to 40 times faster performance compared to traditional disk based tables and thereby delivering faster business insight with faster querying and reporting. Service provider can now offer high performance transactional workload support to their consumer without sacrificing practically on any features which consumer have access to if their transactional workload is running on disk based tables. In-memory OLTP workloads are future ready and service providers can offer ancillary services including hybrid architecture to their consumer on the need basis.

High-Performance Workload Scenario

A high-performance workload scenario is a scenario where consumer comes with specific asks to service provider where low or negligible data latency is expected from a highly transactional database and the data size itself is huge, for such a scenario there are many parameters which must be considered from an in-memory reference architecture point of view like:

- Identification of highly transactional tables

- Indexing considerations

- Query performance best practices

- Resource pooling

- Application access and integration

- Security Considerations

- Backup and recovery

Identify In-Memory Workload Tables

In-memory OLTP performs best for certain specific types of workload.

This can help identify if you have a workload that can be potentially optimized using SQL Server's In-Memory Optimization. The main workload areas that are most likely to see the greatest benefits from using In-Memory OLTP are summarized below:

| Workload Type | Examples | Main Benefits of In-Memory OLTP |

| High Data Insert Rate | Smart Metering System Telemetry | Eliminate contention Minimize I/O logging |

| Read Performance and Scale | Social Network Browsing | Eliminate contention Efficient data retrieval Minimize code execution time CPU efficiency for scale |

| Compute Heavy Data Processing | Manufacturing supply chains or retailers | Eliminate contention Minimize code execution time Efficient data processing |

| Low Latency | Online Gaming Platforms Capital Markets | Eliminate contention Minimize code execution time Efficient data retrieval |

| Session State Management | Managing sessions (i.e. user requests, etc.) for a heavily-visited website | Eliminate contention Efficient data retrieval Optional I/O reduction/removal |

NOTE: In SQL Server 2016, the recommendation is not to have more than 2 TB of data in memory-optimized tables.

Index Design Considerations

Specifying the right indexes can dramatically improve query performance. Each memory-optimized table must have at least one index and must have a declared primary key, which could then be supported by the required index. For a better performance it is important to determine when to use these indexes.

Determining Which Indexes to Use for a Memory-Optimized Table

Non-clustered Index: When converting a table from disk-based to memory-optimized, best practice is to start with non-clustered indexes that mirror the index keys of the clustered and non-clustered indexes on the disk-based table. Also, when unsure which index to pick, use a non-clustered index.

Hash index: If a given index is only used for primary/unique key enforcement or point lookups, consider converting that index to hash, to optimize point lookup and insert operations.

Columnstore Index: Columnstore indexes are optimized for analytics queries that scan and/or aggregate over large sections of data.

Limitations:

Hash Indexes do not support point lookup operations based on leading index key columns; Queries must include equality predicates for all index key columns to benefit from the point lookup performance of hash indexes.

Query Performance Considerations

In-Memory OLTP features works internally to provide improved performance with memory-optimized tables. This increased performance is obtained by making changes in below main design areas compared with the previous disk-based storage for our relational data:

Dual nature: A memory-optimized table has a dual nature: one representation in active memory, and the other on the hard disk. Each transaction is committed to both representations of the table. Transactions operate against the much faster active memory representation. Memory-optimized tables benefit from the greater speed of active memory versus the disk. Further, the greater nimbleness of active memory makes practical a more advanced table structure that is optimized for speed. The advanced structure is also pageless, so it avoids the overhead and contention of latches and spinlocks.

No locking: The memory-optimized table relies on an optimistic approach to the competing goals of data integrity versus concurrency and high throughput. During the transaction, the table does not place locks on any version of the updated rows of data. This can greatly reduce contention in some high volume systems.

Row versions: Instead of locks, the memory-optimized table adds a new version of an updated row in the table itself, not in tempdb. The original row is kept until after the transaction is committed. During the transaction, other processes can read the original version of the row.

- When multiple versions of a row are created for a disk-based table, row versions are stored temporarily in tempdb.

Less logging: The before and after versions of the updated rows are held in the memory-optimized table. The pair of rows provides much of the information that is traditionally written to the log file. This enables the system to write less information, and less often, to the log. Yet transactional integrity is ensured.

Resource Pool Design Considerations

When many Users attempt to modify data in a database at the same time, modifications made by one adversely affect those of another which slows down the performance. To prevent this, In-Memory OLTP engine designed for high concurrency uses an optimistic concurrency control mechanism to eliminate locking delays which increases the performance almost 20 times.

Applications whose performance is affected by engine-level concurrency, such as latch contention or blocking, improve significantly when the application is migrated to in-memory OLTP. Memory-optimized tables do not have pages, so there are no latches and hence no latch wait. If your database application encounters blocking issues between read and write operations, in-memory OLTP removes the blocking issues because it uses optimistic concurrency control to access data. The optimistic control is implemented using row versions, but unlike disk-based tables, the row versions are kept in-memory. Since data for memory-optimized tables are always in-memory, the waits due to I/O path are eliminated. Also, there will be no waits for reading data from disks and no waits for locks on data rows.

Optimistic multi-version concurrency control removes the need for the latches and locks that are necessary in disk-based tables to ensure transactional integrity, but can cause contention and blocking for highly concurrent workloads.

Application Access and Integration

From an application perspective accessing any in-memory OLTP tables within a database is as good as you are accessing any other regular disk based tables. This means technically there is no changes involved in connection strings if an application backend database tables are migrated to in-memory workload type or if you are planning to integrate your new application with any in-memory tables OLTP database. This brings are lot of relief to service provider as well as consumer because access to in-memory OLTP database is transparent.

Security

With SQL Server 2014, if the Consumer say 'My application contains business-sensitive data that needed extra protection, so security is more important than the performance' in that case In-memory OLTP is not the way to go, unfortunately. But with SQL Server 2016 Consumer's application can be fast as well as fully secured as it supports TDE (Transparent Data Encryption) with which memory-optimized tables data on disk will be encrypted. TDE can be enabled on a database that has In-Memory OLTP objects. In-Memory OLTP log records are encrypted if TDE is enabled.

This allows for greater security along with the performance enhancements provided by memory-optimization.

Security Considerations:

- While a re-encryption scan for a database encryption operation is in progress, maintenance operations to the database are disabled. You can use the single user mode setting for the database to perform the maintenance operation.

- You can find the state of the database encryption using the sys.dm_database_encryption_keys dynamic management view.

- In TDE, all files and filegroups in the database are encrypted. If any filegroups in a database are marked READ ONLY, the database encryption operation will fail.

- If a database is being used in database mirroring or log shipping, both databases will be encrypted. The log transactions will be encrypted when sent between them.

NOTE: Encrypted data compresses significantly less than equivalent unencrypted data. If TDE is used to encrypt a database, backup compression will not be able to significantly compress the backup storage. Therefore, using TDE and backup compression together is not recommended.

Backup and Restore

Memory optimized tables are backed up as part of regular database backups so you don't need to do anything special to manage backups of databases with memory optimized tables. However backup of memory optimized tables will be much larger than compared to actual memory footprint. A full backup of a database with one or more memory-optimized tables consists of the allocated storage for disk-based tables (if any), the active transaction log, and the data and delta file pairs (also known as checkpoint file pairs) for memory-optimized tables. We can also do differential backups of databases with memory optimized tables.

In the similar way the restore of databases with memory optimized tables is similar to disk based tables, only addition to this is that memory optimized tables must be loaded into memory before database is available for user access.

Summary

In this document we studied how In-memory OLTP can dramatically improve throughput and latency on transactional processing workloads and can provide significant performance improvements for Consumer's applications. We have seen the workload types which can benefit the most in terms of performance when In-Memory OLTP is used. Furthermore, this is the purpose of memory-optimized tables, index designing and natively-compiled stored procedures in efficient data access and improved performance.